This post was drafted in response to journalists’ questions (e.g. from Wiener Zeitung and Forbes) on the occasion of a week-long AI & Ethics seminar and of the ‘State of AI’ panel discussion I was invited to. Shame they asked me before James Mickens gave his phenomenal Usenix 2018 keynote on ML, IoT et al. I would have simply directed them to it.

QUESTIONS

- Q1: In addition to a Universal Declaration of Human Rights, do you need a Universal Declaration of cyborg / robot rights?

- Q2: Who has to be protected from whom?

- Q3: What importance do programmers have in the future?

- Q4: How can one ensure that there is a universal catalog of ethical behavior in artificial intelligence?

- Q5: Do man and machine merge?

- Q6: What are the future challenges in coping with artificial intelligence and transhumanism?

- Q7: Have we arrived in the first phase of transhumanism?

- Q8: Is it time that, alongside the IT industry, more and more humanities scientists are involved in the questions of the technological future?

Original list of questions

CLARIFICATIONS: What is AI?

First, let’s clarify what we mean by Artificial Intelligence. AI is an umbrella term for a number of related technologies and fields of study. Suchman’s description of AI is a good starting point: “AI is the field of study devoted to developing computational technologies that automate aspects of human activity conventionally understood to require intelligence”. The words ‘conventionally understood’ are key.

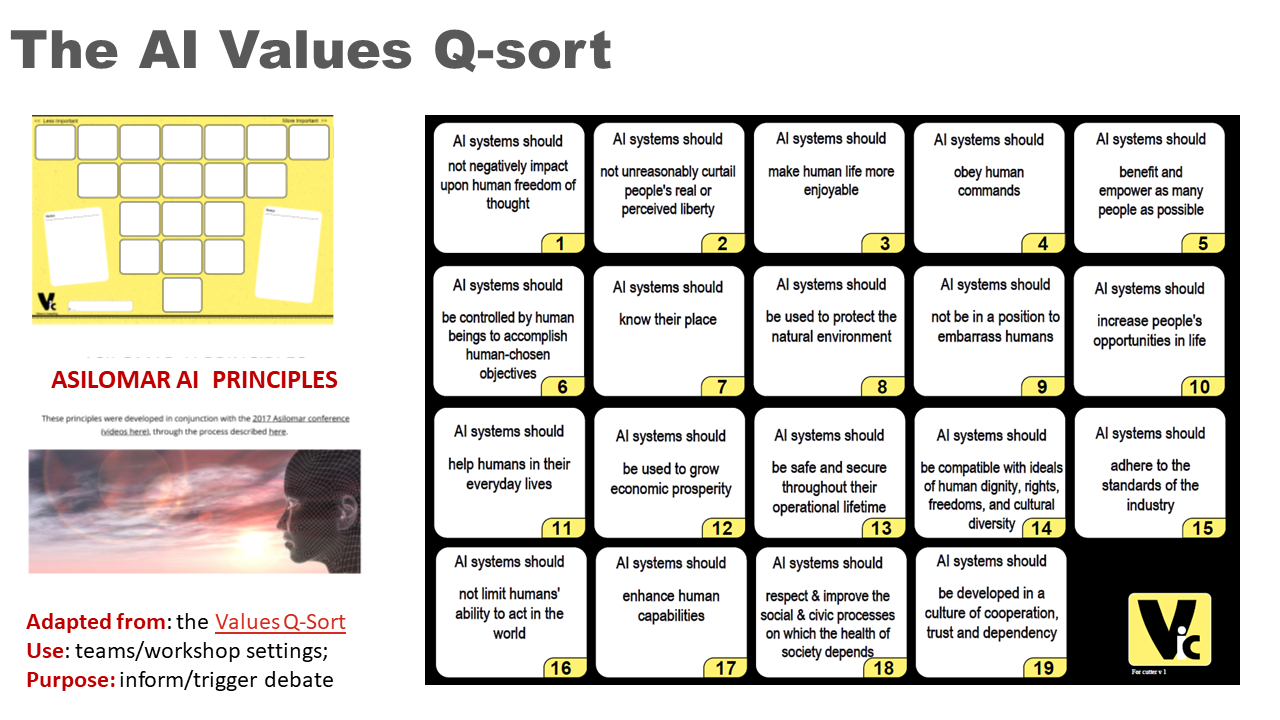

In the last 20 years, this field of study has been focused on the “construction of intelligent agents — systems that perceive and act in some environment. In this context, the criterion for intelligence is related to statistical and economic notions of rationality — the ability to make good decisions, plans, or inferences” (AI open letter 2015).

The combination of access to big data, increasing computing power, and market promises has vastly accelerated AI development in the last two decades. The ‘criterion for intelligence’ is here linked to rational thinking as defined by statistics and economics – e.g. in terms of utility and performance.

This means that, once humans have designed the problem space in which a machine intelligence operates, the machine will act rationally within it. Acting rationally does not necessarily mean acting fairly or considerately.

APPROACH

To better answer the questions, I distinguish between ‘Intelligent agents type A’ and ‘Intelligent agents type B’. This distinction is fictional, and broadly overlaps with the distinction between ‘narrow’ and ‘general AI’. Put simply, it separates what is available now from what we do not have yet, but may have in the future.

- Intelligent agents A – this is what we have now, systems that use AI computational techniques (e.g. machine learning) for a variety of tasks with some specific goal (e.g. speech recognition, image classification, machine translation). These component tasks can be used in isolation or combined. Examples include image recognition for cancer detection, and data models for diabetes prediction.

- Intelligent agents B – this is what we do not have yet, but it is at the centre of much media attention: an engineered non-biological sentient entity (i.e. synthetic, hybrid) equipped with unbounded General AI capabilities. In other words, an entity engineered to successfully perform – and surpass – any human intellectual task.

I would not say that it is impossible to build a ‘type B’ intelligence, but I’d question the values underpinning such a desire.

ANSWERS TO JOURNALISTS’ QUESTIONS

The journalists’ questions were many and complex. I thought it best to reason about them with ‘team ViC’. Below are my personal reflections, informed by team discussions. As an experiment, each of us also gave a “one-word” answer to each of your questions. I have summarized these at the start of each answer, in italics.

Q1: In addition to a Universal Declaration of Human Rights, do you need a Universal Declaration of cyborg / robot rights?

No. (not until)

Given our Human Rights track record, we should not build ‘type B’ machines that need to be granted rights until the rights of every single human being are respected. Also, granting ‘electronic person’ status to a machine may be just another way of relieving the tech industry of its responsibilities.

The Universal Declaration of Human Rights (UDHR) is a testament to both human ‘kindness’ and to our struggle to honor our very own rights. We, as a species, are currently in breach of every single UDHR article. Furthermore, old problems not only seem to persist, but also seem to morph and grow in scale, for example:

- Slavery is rife and on the rise. There are now an estimated 40M people in slavery (vs 30M in 2013). Slavery has morphed into more subtle, hidden and deeply pervasive new forms.

- Wars still ravage many of our nations, and the trend is upwards. AI development and the military are historically, and problematically, intertwined (e.g. the protest by some 4,000 Google employees over Project Maven).

- Inequalities, both social and economic, are widening, and it is happening on our doorstep. Research has found that 1 in 3 children in Britain lives in poverty and that this is a rising trend.

How can we be capable of respecting entities that are not ‘us’ when we are not good at respecting our own rights? Or, in reverse, are we sure that we can build machines that will respect humans? Some may dream of Sophia, but it is Mary Shelley who seems to be currently stealing the show.

Many top AI researchers argue that we should not grant machines the status of an electronic person – making machines responsible for their actions (good or bad) would mean that their designers and manufacturers could be relieved of their responsibilities. What would you do if a machine did something wrong? Fine it? Put it in jail?

Q2: Who has to be protected from whom?

Humans from Humans

(See Q1 above)

Q3: What importance do programmers have in the future?

Much and Hardly Any

Perhaps too much focus is placed on individual developers’ responsibilities, whereas these responsibilities are often distributed. More emphasis should be placed, firstly, on investigating the changes that computationally intensive technologies are bringing to society, starting by reflecting on our own individual lives; and secondly, on questioning whether these changes are desirable or not and, most importantly, desirable to whom. Desirability is a tough nut to crack; Russell et al. try to explain why.

Q4: How can one ensure that there is a universal catalog of ethical behavior in artificial intelligence?

One Can Not

Philosophically, this assumes the possibility (and desirability?) of Universal Ethics to be used by machines. Ethics are codified principles of what a society, organisation, or any other human constituency considers right or wrong; behaviour, by contrast, is situated, contextual, and salient. Formalised Universal Ethics do not guarantee ethical behaviour, because behaviour is context-dependent, particular, and volatile.

Technically, we can improve the transparency and externalization of machine reasoning. For example, research in autonomous systems is looking into externalizing the reasoning underpinning a system’s behavior; this paper describes the technical challenges involved.

Q5: Do man and machine merge?

Done.

Man and machine have long merged; the biggest merger is with the Internet, to which we are constantly and collectively connected. Individually, we also have pacemakers, defibrillators, insulin pumps – complexity and scale will increase, but fundamentally the merger has already happened. Cybernetics is about technology-driven systems control. I have seen ‘dying’ ravaged by a simple pacemaker. What could the implications of intelligence augmentation be, for both the living and the dying?

Q6: What are the future challenges in coping with artificial intelligence and transhumanism?

Batteries, jobs, children.

Firstly, the intelligent machines of which we speak need power, energy, ‘food’. How will they be fed? Who will feed them? Bio-fuel crops already compete with human foodstuff production, and predictions indicate that the communications industry will consume more than 20% of the world’s electricity by 2020.

Secondly, much focus is placed on human / machine competitiveness in the job market. I’d also keep a closer eye on the roles that humans have and should have in society, how those may get eroded (e.g. the joy and opportunities to exercise creativity and problem solving, care for children, care for the elderly).

These are roles that, I’d argue, define the very essence of being human. Is transhumanism really what most of us want for our children? Is that what our older selves want?

Q7: Have we arrived in the first phase of transhumanism?

Potentially

There is certainly an increased desire for transhumanism, and where there is a will, there is a way. Human history has been shaped by the desire and reverence for ‘entities’ that transcend us. When such entities cannot be found, we imagine, evoke, and, eventually, build them.

Q8: Is it time that, alongside the IT industry, more and more humanities scientists are involved in the questions of the technological future?

Maybe too late

The humanities have long been involved in these questions. Historians and philosophers have been working on pattern recognition for centuries, and have noted that at times it works and at other times it doesn’t.

QUESTIONS ABOUT AI & ETHICS (original list)

- In addition to a Universal Declaration of Human Rights, do you need a Universal Declaration of cyborg / robot rights?

- Who has to be protected from whom?

- What importance do programmers have in the future?

- How can one ensure that there is a universal catalog of ethical behavior in artificial intelligence?

- Is it not easier to teach artificial intelligence an ethical framework along which decisions are made?

- Do man and machine merge?

- Which social, ethical, cultural and political consequences arise from this?

- Are there technical or biological limits to the merger, and where do they lie so far?

- Why do you not talk about cyborgs anymore?

- How human has AI to be? Is human society ready for AI?

- What are the future challenges in coping with artificial intelligence and transhumanism?

- Have we arrived in the first phase of transhumanism?

- Is it time that, alongside the IT industry, more and more humanities scientists are involved in the questions of the technological future?

OF MEDIA

“Fear sells, and articles using out-of-context quotes to proclaim imminent doom can generate more clicks than nuanced and balanced ones.” {AI Q&As}. Media should support mature and informed conversations on this topic. This blog post has been drafted as a basis for conversation with the media.

(MY) RESEARCH CONTEXT

I work at the intersection of human computer interaction (HCI) and software engineering (SE). My research focuses on human values in computing, particularly values in software production, but not specifically in AI.

My expertise is in applied digital innovation (i.e. in health, environment, social change). I examine the role and impact of digital innovation on society (and vice-versa), often through rapidly prototyped technologies. I have also put some narrative about my background below to further contextualize my answers.

MARIA ANGELA’S BACKGROUND:

I did Philosophy and Social Psychology as my first degree/masters in Italy (Universita’ Cattolica, Milano). Two weeks after my Viva, I left Italy for Ireland to learn English. I only planned to stay for three months. A year later, I was awarded a place on an MSc in Multimedia Systems Design at the Engineering Department of Trinity College Dublin, Ireland; after that, I was offered a PhD at the Computer Science Department, University College Dublin.

My PhD was in a branch of AI (Case Based Reasoning); it was 15 years ago. Immediately after my PhD I left academia and the field, precisely because I felt uncomfortable with the research domain and its applications (i.e. user profiling for commercial purposes). After that, I worked as a project manager for an EU agency. The focus was on peace building and reconciliation through the technological and economic development of EU cross-border regions.

Fast forward several years, and I am back in academia as a lecturer in computer science. Underpinning my research is a passion for understanding the interplay between human values and computing. This comes from years of working on digital innovation research partnerships with vulnerable parts of our society.

AI Values Q-Sort, a
